Our client is an end-to-end IT services company that helps its clients by modernizing and integrating their mainstream IT, and by deploying digital solutions at scale to produce better business outcomes.

Business Challenge

Our client developed a system that helps users estimate the cost of a bid and decide on its pricing. They came to SoftServe to establish a performance testing process for their product, to obtain performance metrics before the system went live, and to address any performance issues uncovered along the way.

Project Description

To meet our client’s requirements for a reusable and scalable solution, SoftServe defined a repeatable process with the following steps:

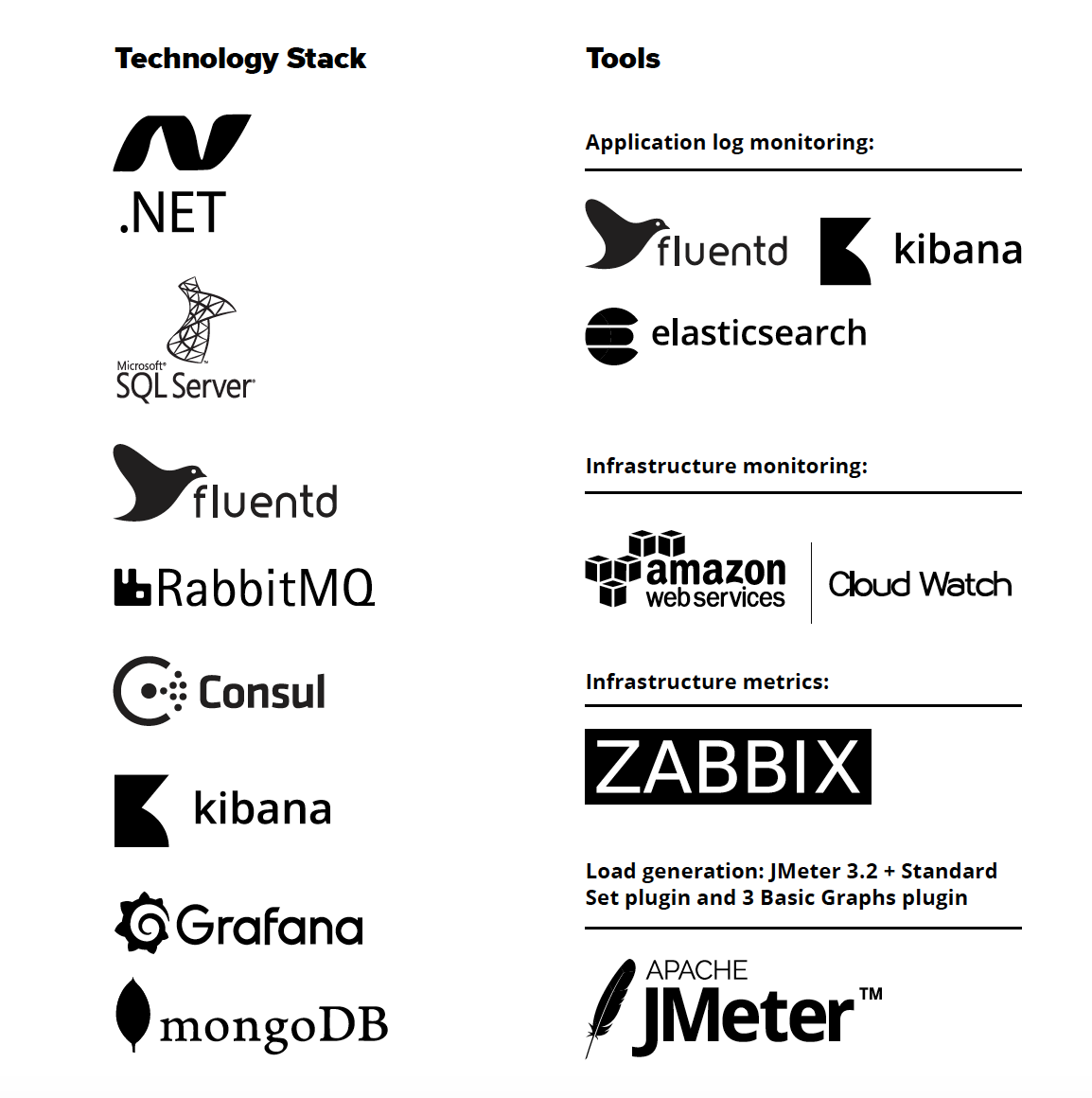

- Create a new testing environment using an Ansible script and an AWS CloudFormation template with the required resource allocation

- Deploy the required version of the application to the environment using the AWS CodeDeploy service

- Adapt JMeter scripts to application changes (if needed)

- Run performance tests with predefined scenarios

- Collect performance metrics and record them for further analysis

- Destroy the testing environment
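The steps above can be sketched as a small driver script. This is a minimal illustration, not SoftServe's actual tooling: the playbook name, stack name, CodeDeploy application and deployment group, S3 bundle location, and JMeter file names are all hypothetical placeholders, and the sketch assumes the Ansible playbook is what applies the CloudFormation template. It only builds the command line for each stage so the sequence can be reviewed before anything runs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RunConfig:
    # Every value here is a hypothetical placeholder, not taken from the project.
    playbook: str = "perf_env.yml"
    stack_name: str = "perf-test-env"
    app_name: str = "bid-estimator"
    deployment_group: str = "perf-test"
    bundle_bucket: str = "example-bucket"
    bundle_key: str = "app-1.0.0.zip"
    test_plan: str = "scenarios.jmx"
    results_file: str = "results.jtl"


def build_plan(cfg: RunConfig) -> List[List[str]]:
    """Return the ordered commands for one performance test cycle."""
    # Assumption: the playbook provisions the CloudFormation stack by name.
    create_env = ["ansible-playbook", cfg.playbook, "-e", f"stack_name={cfg.stack_name}"]
    # Deploy the required application revision through AWS CodeDeploy.
    deploy = [
        "aws", "deploy", "create-deployment",
        "--application-name", cfg.app_name,
        "--deployment-group-name", cfg.deployment_group,
        "--s3-location",
        f"bucket={cfg.bundle_bucket},key={cfg.bundle_key},bundleType=zip",
    ]
    # Run JMeter in non-GUI mode and log samples to a results file.
    run_tests = ["jmeter", "-n", "-t", cfg.test_plan, "-l", cfg.results_file]
    # Tear the environment down last.
    destroy_env = ["aws", "cloudformation", "delete-stack", "--stack-name", cfg.stack_name]
    return [create_env, deploy, run_tests, destroy_env]


if __name__ == "__main__":
    for command in build_plan(RunConfig()):
        print(" ".join(command))
```

To execute the plan rather than print it, each command could be passed to `subprocess.run(command, check=True)`, with the teardown command placed in a `finally` block so the environment is destroyed even when a test run fails.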

During the initial run on a single instance, the test never finished and exposed several critical issues. After migrating to a multi-node deployment and addressing those issues, SoftServe was able to execute the test and capture baseline metrics. Each subsequent performance improvement iteration reduced the total execution time.
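Comparing each iteration against the baseline can be sketched as follows. This is an assumption-laden illustration rather than the project's actual analysis: it assumes JMeter results were saved as CSV with a header row containing the standard `label` and `elapsed` (milliseconds) fields, and the function names are hypothetical.

```python
import csv
import io
import statistics
from typing import Dict, List


def summarize_jtl(csv_text: str) -> Dict[str, Dict[str, float]]:
    """Group JMeter CSV samples by label and summarize elapsed time in ms."""
    samples: Dict[str, List[int]] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        samples.setdefault(row["label"], []).append(int(row["elapsed"]))
    return {
        label: {
            "count": len(values),
            "mean_ms": statistics.mean(values),
            "max_ms": max(values),
        }
        for label, values in samples.items()
    }


def improvement(baseline_ms: float, current_ms: float) -> float:
    """Fractional reduction relative to the baseline (0.25 means 25% faster)."""
    return (baseline_ms - current_ms) / baseline_ms
```

Storing one summary per iteration makes it straightforward to confirm that a change actually reduced execution time before moving on to the next improvement.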

Key challenges overcome during this project included:

- Complex business logic

- Numerous cross-referenced objects

- Complex business rules for the bid’s calculation

- Nine levels of nested objects

- Many new features added during the testing period

- Lots of changes in the API and UI between versions

Value Delivered

The performance testing results allowed our client to improve its product’s performance and have a scalable solution. They received insights into the weak points of the solution. SoftServe developed several test scenarios that reflected the most critical use cases and created an automated performance testing environment to minimize deployment time.

The baseline performance test showed that the system could handle up to 100 commodities per user with response times of up to 15 seconds. After the performance improvements, the system can comfortably serve users with 2,000 commodities while still maintaining an acceptable response time for most functions.

SoftServe continues to collaborate with our client’s development team to respond promptly to any issues detected.