DevOps requires a strong backend architecture along with expert knowledge of technical components such as continuous integration and delivery, infrastructure as code, containerization, and microservices. However, the practice of DevOps is only truly successful if a culture of “release early and often” is in place between business stakeholders, development teams, and operations support.

DevOps success stories are abundant in tech blogs and industry publications, and the practice has been credited with helping large enterprises launch new offerings, save money, and change the culture of their organizations.

DataOps

In an age where contextual and personalized experiences drive consumer engagement, collecting actionable data from customers and then using these insights to create a hyper-personalized experience is critical—but collection alone is not enough. How the data is ingested, normalized, checked for accuracy, managed, and made available to the organization is equally important.

Enter DataOps: the practice of using the proper set of tools, processes, and behaviors around working with data. It combines best practice elements from platform data science and data architecture with the corporate cultural paradigm of treating data as a first-class citizen.

Technology

When working with data, success is heavily dependent on having an integrated set of tools to manage the data across its lifecycle and the various workflows throughout the organization. Data exists in varying formats and states of readiness for consumption by applications, data scientists, or business users. It’s vital to data’s integrity to have the right infrastructure to not only ingest and store data, but also normalize, scrub, aggregate, and vet the data. This infrastructure must also model and prepare data for machine learning and provide robust security. To handle the vast amounts of data being captured by businesses today and optimize personalization at scale, businesses should look to the cloud.

Your company may also consider unifying systems to position business logic closer to data sources for real-time analysis enablement—especially when it comes to use cases such as making recommendations based on activity, location, or even personality.
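
To make the normalize, scrub, vet, and aggregate steps described above a little more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `CustomerEvent` fields, the validation rules, and the function names are hypothetical, and a real pipeline would typically run on managed cloud services rather than in-memory lists.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CustomerEvent:
    # Hypothetical record shape, chosen only for this example.
    customer_id: str
    email: str
    country: str
    amount: float


def normalize(raw: dict) -> dict:
    """Trim whitespace and standardize casing on keys and string values."""
    cleaned = {}
    for key, value in raw.items():
        cleaned[key.strip().lower()] = value.strip() if isinstance(value, str) else value
    if isinstance(cleaned.get("email"), str):
        cleaned["email"] = cleaned["email"].lower()
    if isinstance(cleaned.get("country"), str):
        cleaned["country"] = cleaned["country"].upper()
    return cleaned


def vet(record: dict) -> Optional[CustomerEvent]:
    """Return a typed event, or None if the record fails basic checks."""
    try:
        amount = float(record["amount"])
    except (KeyError, TypeError, ValueError):
        return None
    customer_id = record.get("customer_id")
    email = record.get("email")
    if not customer_id or not isinstance(email, str) or "@" not in email or amount < 0:
        return None
    return CustomerEvent(str(customer_id), email, str(record.get("country") or ""), amount)


def run_pipeline(raw_records):
    """Split raw records into vetted events and rejects kept for review."""
    accepted, rejected = [], []
    for raw in raw_records:
        event = vet(normalize(raw))
        if event is None:
            rejected.append(raw)
        else:
            accepted.append(event)
    return accepted, rejected


def total_by_country(events):
    """Aggregate vetted amounts per country."""
    totals = {}
    for event in events:
        totals[event.country] = totals.get(event.country, 0.0) + event.amount
    return totals
```

Keeping rejected records instead of silently dropping them is a deliberate choice in this sketch: it gives the people who own the data a way to see what failed vetting and why.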

Processes and Policies

Working with data captured from (or shared by) your customers carries an element of mutual trust. And if you’re doing business in Europe, data capture is a highly regulated practice under the new GDPR. In either case, there is a responsibility to avoid improper usage or the release of PII/PID. DataOps practices define the processes around the use of data as well as policies for the safeguarding of data sets and individual sensitive elements.

Understanding the risks and ensuring data security is only a part of compliance. Companies must also ensure that policies are effectively communicated and enforced corporatewide. A proper DataOps strategy aids in that risk awareness.
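
One way a policy for safeguarding individual sensitive elements might be enforced in code is sketched below. This is an illustration under stated assumptions, not a compliance recipe: the `SENSITIVE_FIELDS` list, the function names, and the choice of keyed hashing are all hypothetical. Note also that keyed hashing is pseudonymization rather than anonymization, so the output would still need to be handled as personal data.

```python
import hashlib
import hmac

# Hypothetical policy: which fields count as sensitive is an assumption here.
SENSITIVE_FIELDS = {"email", "phone", "full_name"}


def pseudonymize(value: str, secret: bytes) -> str:
    """Replace a value with a keyed SHA-256 digest (HMAC), hex encoded."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()


def apply_policy(record: dict, secret: bytes) -> dict:
    """Return a copy of the record with sensitive fields pseudonymized."""
    safe = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS and value is not None:
            safe[field] = pseudonymize(str(value), secret)
        else:
            safe[field] = value
    return safe
```

Because the same input and secret always produce the same digest, analysts can still join and count records by customer without seeing the underlying email address or phone number. The secret itself would need to be stored and rotated according to your own security policy.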

Data for the people

The democratization of data will ultimately enable your company to truly transform the way it works. A well-known data scientist once told me:

The most important thing about working with data is knowing the right questions to ask.

I recall those words each time I have discussions about a company’s vast data lakes and data warehouses. In many cases, the data is not truly understood and there is no clear picture of what knowledge is hoped for through the capture and analysis of said data.

Humanizing data is an element of DataOps that makes analytics consumable and actionable, but the end goal of working with data is not to create a chart, graph, or dashboard—it’s about changing behavior. Humanizing the data means breaking through technology and process topics to really consider whose behavior we’re trying to change and why. I have found that workshops using Design Thinking techniques and exercises are a powerful way to get to the root goal around working with data. Whether you choose to have these conversations in an interactive workshop setting or just in an informal stakeholder discussion, it’s vital that these discussions happen.

A DataOps strategy will not be successful unless the human elements of the data have been explored and are understood by all stakeholders. Beyond knowing what questions to ask, it’s essential to know how to visualize the results in a way that makes sense to everyone as well as to know what actions should be taken based on data learnings. These discussions among those responsible for managing, interpreting, and acting on the data will start to form a data-centric culture throughout the organization, transforming the way business decisions get made.

Conclusion

A solid DataOps strategy will yield success similar to, if not greater than, what enterprises are seeing with modern DevOps practices. The tools, processes, and culture of working with data will yield better insights into your customers’ habits, changing the way you view, serve, and anticipate their wants and needs.

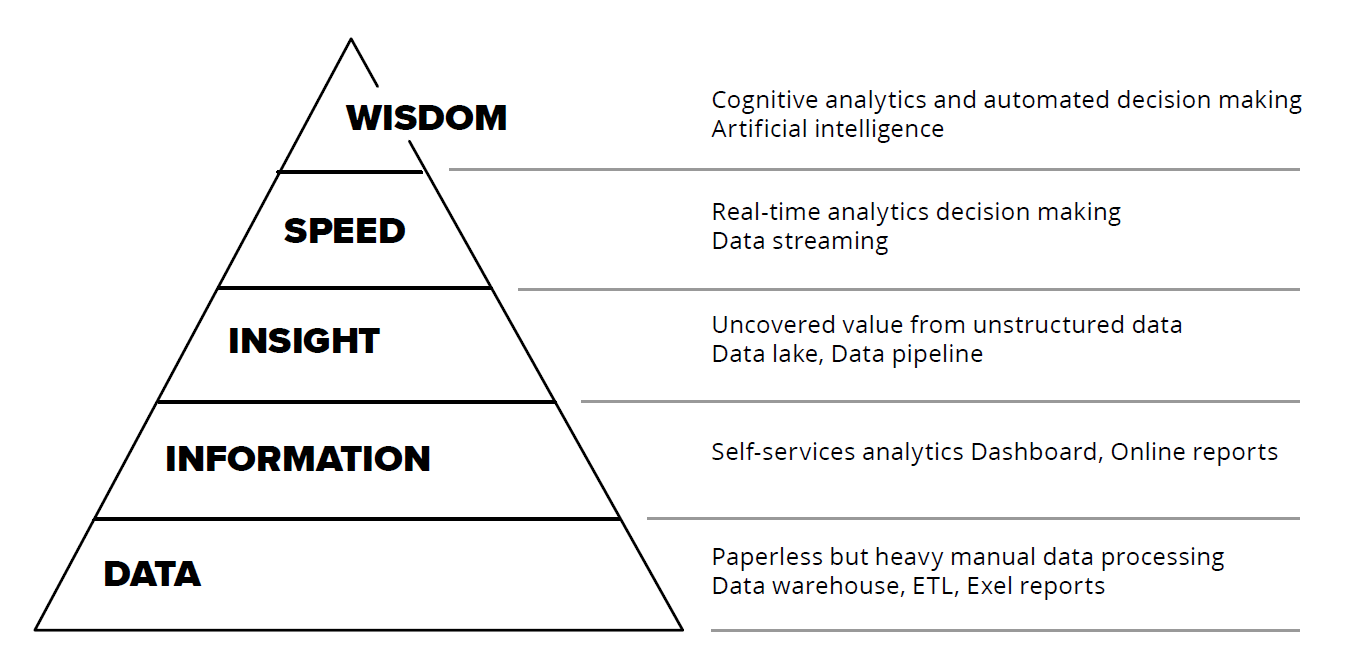

SoftServe’s Data Maturity model is a benchmark by which companies can assess where they are in their data journey. Where does your organization fall?

For more information, contact SoftServe today.