Keep Autonomous Vehicles in Check With AI-powered Simulation

Autonomous vehicle (AV) development is moving fast. By 2030, over 50% of vehicles will have ADAS technology. Yet, current testing methods could be improved by fully leveraging simulation capabilities to increase safety and development speed.

Many companies now use an AI-enabled, simulation-first approach to test their AV systems. Others hold back because of concerns about cost, regulation, transition time, and integration. If you are among the doubters, the transition is easier than you think.

This article shares a digital twin framework powered by Gen AI and synthetic data generation (SDG), backed by real-world use cases. With this method, you can build safer AVs, meet safety standards, and go to market faster.

ADAS (advanced driver-assistance systems) are electronic systems in cars that make driving safer and easier by automating, adapting, or improving vehicle features. Examples include adaptive cruise control, lane-keeping assist, and parking assist.

5 Reasons Real-World Testing Alone Falls Short

Developing AVs requires more than miles on the road for testing. Traditional testing is no longer enough because AVs must navigate highly complex environments, meet strict regulations, and ensure complete safety, which demands more advanced approaches. Challenges include:

- Road testing is expensive and time-consuming, especially for rare edge cases like animal crossings or low-visibility conditions

- Dangerous or uncommon scenarios can’t be reliably reproduced on the road

- Manually labeling and processing sensor data is slow

- Sensors, perception models, and control logic rarely interact seamlessly

- Evolving standards and regulations, such as ISO 26262, require traceable, repeatable testing

Safer Driving in 90 Seconds

SoftServe’s simulation-first framework helps AVs navigate the unpredictable — before they hit the road.

Simulation-First Approach

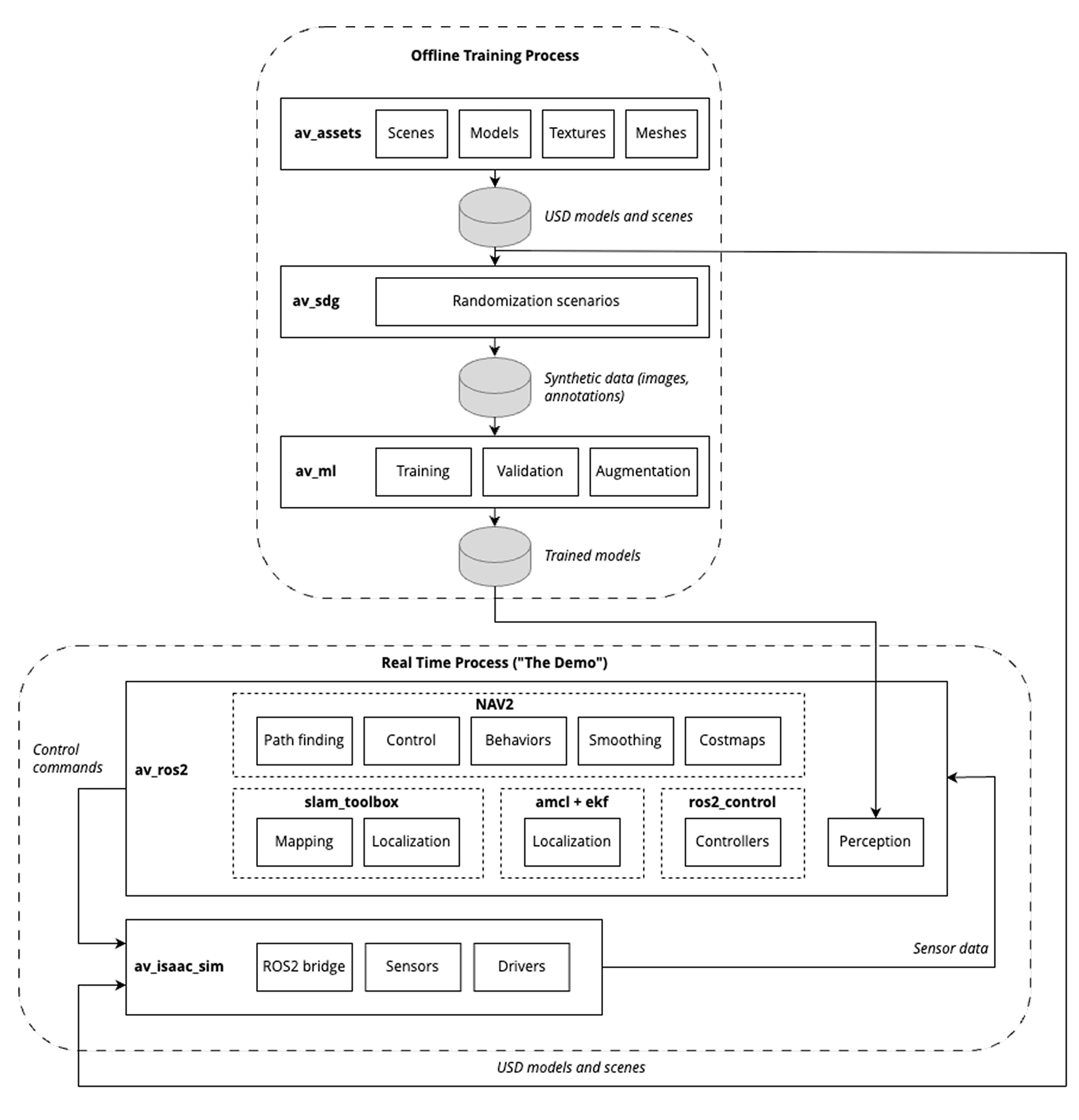

SoftServe's AI-powered, simulation-first solution is built on digital twins and synthetic data generation. Integrated with Gen AI, CI/CD pipelines, and hardware-in-the-loop validation, this approach enables faster prototyping, robust testing, and safer deployment — before your vehicles hit the road.

It supports the full lifecycle of AV development — from perception model training to control system validation — within a modular, testable architecture.

Key principles that define the framework:

Simulation-first methodology:

Digital twins of vehicles and environments enable safe, repeatable, and scenario-rich development.

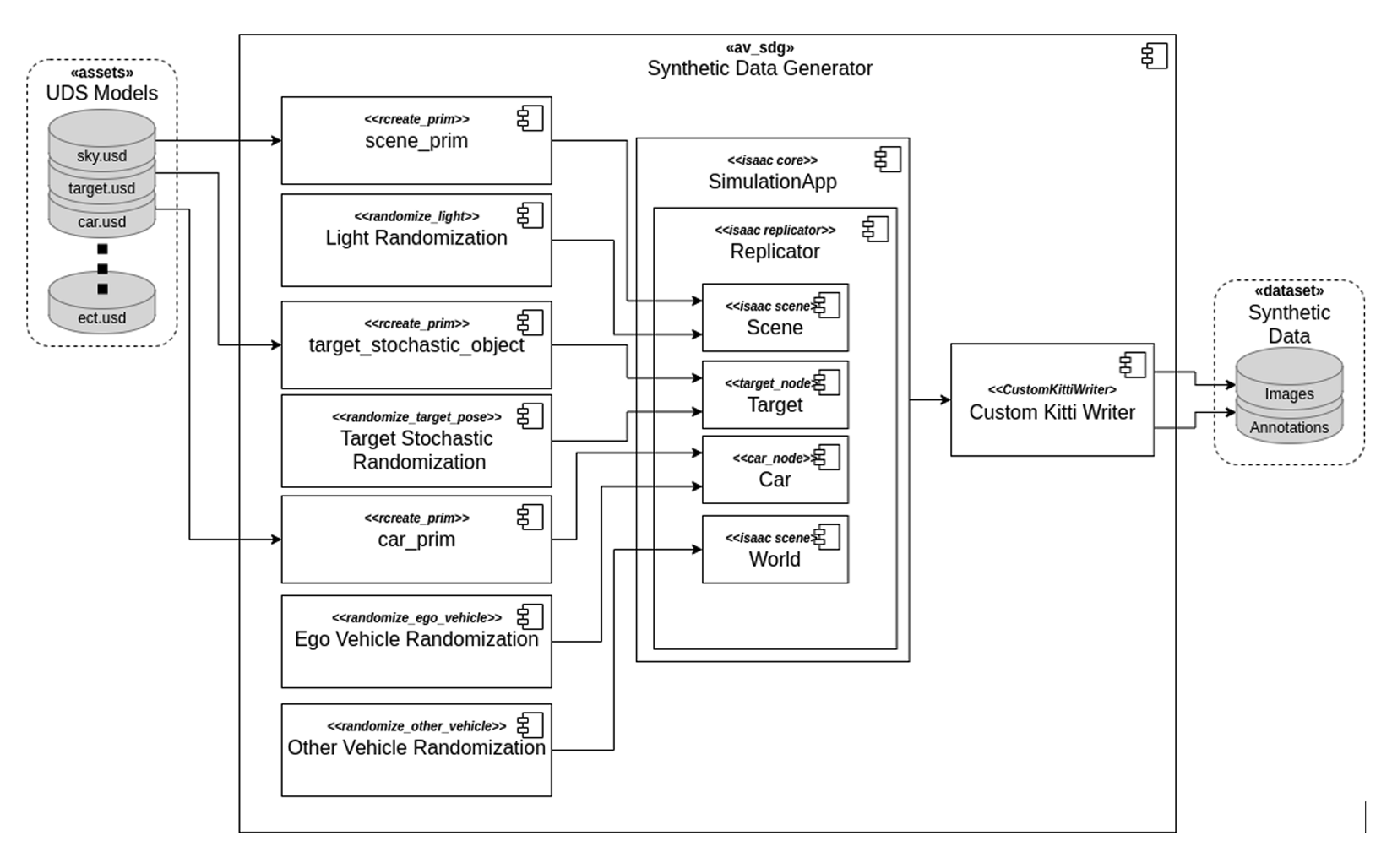

Synthetic data generation (SDG):

High-fidelity, labeled data supports training and validating perception systems under varied and rare conditions.

Closed-loop testing:

Integration of perception, planning, and control components in simulation ensures realistic behavior validation.

Hardware-in-the-loop (HIL):

Embedded systems can be tested with real hardware in a framework-agnostic approach, allowing full system emulation.

CI/CD integration:

Automation pipelines trigger scenario-based testing with traceable builds and performance monitoring.

Interoperable toolchain:

Built on NVIDIA Isaac Sim, Replicator, TAO Toolkit, and ROS2 — while remaining adaptable to external platforms like CARLA and NVIDIA Cosmos for extended scenario generation and world modeling.

Benefits

- Accelerated time-to-market

- Early-stage fault detection

- Lower costs

- Improved safety

- Continuous performance monitoring

- Scalable and repeatable

- Data-rich training pipelines

- Aligns with safety standards

This architecture lays the technical foundation for AI-driven development, Gen AI-powered edge-case generation, and safety-first automation — detailed in the following subsections.

Synthetic Data and Gen AI

Training perception models to recognize complex, unpredictable, or rare scenarios requires data that real-world testing simply can’t provide at scale. That’s where synthetic data generation (SDG) and Gen AI come into play in our framework:

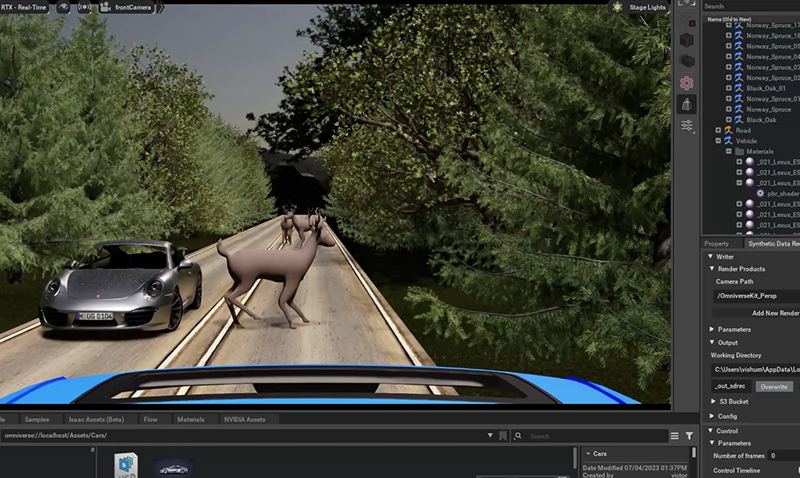

NVIDIA Replicator makes high-fidelity sensor simulation easier, generating RGB, depth, LIDAR, and radar data across different lighting, weather, and traffic conditions.

The pipeline also delivers ground-truth labels at scale (bounding boxes, segmentation masks, and optical flow) quickly and accurately.

Gen AI improves test scenarios using large language models to script complex traffic events, rare animal interactions (like a deer crossing with occlusion), or tricky adversarial conditions that would otherwise be hard to program manually.
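When a language model scripts scenarios, it helps to validate its output before handing it to the simulator. The sketch below assumes the model returns JSON. The schema (`weather`, `events`, `trigger_distance_m`, and so on) and the allowed values are hypothetical, chosen only for illustration, and are not part of any simulator API.

```python
import json
from dataclasses import dataclass

# Hypothetical schema: field names and allowed values are illustrative only.
ALLOWED_ACTORS = {"deer", "pedestrian", "vehicle", "cyclist"}
ALLOWED_WEATHER = {"clear", "rain", "fog", "glare"}
MAX_ACTOR_SPEED_MPS = 40.0


@dataclass(frozen=True)
class ScenarioEvent:
    actor: str
    trigger_distance_m: float  # ego-to-actor distance at which the actor starts moving
    speed_mps: float
    occluded: bool


@dataclass(frozen=True)
class Scenario:
    name: str
    weather: str
    events: tuple


def parse_scenario(raw: str) -> Scenario:
    """Parse an LLM-produced JSON string; raise ValueError if it breaks the schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"scenario is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("scenario must be a JSON object")
    if data.get("weather") not in ALLOWED_WEATHER:
        raise ValueError(f"unsupported weather: {data.get('weather')!r}")
    raw_events = data.get("events")
    if not isinstance(raw_events, list):
        raise ValueError("events must be a list")

    events = []
    for item in raw_events:
        if not isinstance(item, dict) or item.get("actor") not in ALLOWED_ACTORS:
            raise ValueError(f"unsupported event: {item!r}")
        try:
            distance = float(item["trigger_distance_m"])
            speed = float(item["speed_mps"])
        except (KeyError, TypeError, ValueError) as exc:
            raise ValueError(f"bad numeric field in event: {item!r}") from exc
        # Reject non-positive distances and speeds outside the plausible range.
        if not distance > 0 or not 0 <= speed <= MAX_ACTOR_SPEED_MPS:
            raise ValueError(f"event values out of range: {item!r}")
        events.append(
            ScenarioEvent(item["actor"], distance, speed, bool(item.get("occluded", False)))
        )
    return Scenario(str(data.get("name", "unnamed")), data["weather"], tuple(events))
```

Rejecting malformed scripts at this boundary keeps a bad generation from silently producing a meaningless test run.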

Synthetic datasets are randomized to account for:

- Object positions, speeds, and orientations

- Pedestrian behaviors and vehicle occlusions

- Environmental variability: rain, fog, glare, and shadows

- Sensor placement changes and field-of-view coverage
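The randomization axes listed above can be sketched as a seeded sampler that produces one parameter set per synthetic frame. This is a minimal illustration in plain Python, not the Replicator API: the parameter names and value ranges are assumptions, and a real pipeline would pass the sampled values to the simulator's own randomization hooks.

```python
import random
from dataclasses import dataclass

WEATHER = ("clear", "rain", "fog", "glare")  # illustrative categories


@dataclass(frozen=True)
class SceneSample:
    object_x_m: float             # longitudinal distance ahead of the ego vehicle
    object_y_m: float             # lateral offset from the lane center
    object_speed_mps: float
    object_heading_deg: float
    weather: str
    fog_density: float            # 0.0 unless the sampled weather is fog
    camera_yaw_offset_deg: float  # small mounting variation
    camera_fov_deg: float


def sample_scene(rng: random.Random) -> SceneSample:
    """Draw one randomized scene description; all ranges are assumed values."""
    weather = rng.choice(WEATHER)
    return SceneSample(
        object_x_m=rng.uniform(5.0, 80.0),
        object_y_m=rng.uniform(-6.0, 6.0),
        object_speed_mps=rng.uniform(0.0, 12.0),
        object_heading_deg=rng.uniform(0.0, 360.0),
        weather=weather,
        fog_density=rng.uniform(0.2, 1.0) if weather == "fog" else 0.0,
        camera_yaw_offset_deg=rng.uniform(-3.0, 3.0),
        camera_fov_deg=rng.uniform(60.0, 100.0),
    )


def generate_dataset_plan(n_frames: int, seed: int) -> list:
    """Return n_frames samples; the same seed always yields the same plan."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n_frames)]
```

Seeding the generator makes a dataset reproducible, which supports the traceable, repeatable testing that safety standards call for.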

Digital Twin: The Foundation of Safety

At the heart of our framework is the digital twin: a virtual replica of the vehicle, sensors, and environment. This lets you test decisions, detections, and controls in realistic, high-risk conditions before deployment.

We model:

- The way the vehicle moves, such as braking, accelerating, and handling

- Where sensors like LIDAR, radar, and cameras are placed, and what they can see

- Changing environments with traffic, pedestrians, road edges, and animals on the move

- Roads and infrastructure, such as rural two-lane roads, faded lane markings, trees blocking views, and uneven shoulder widths

This digital twin supports full system-level integration testing and allows:

- Co-simulation of perception, planning, and control subsystems

- Testing of complex scenarios like animal crossings with limited visibility

- Continuous validation of AV behavior under ISO-aligned safety protocols

Combined with SDG and Gen AI, the digital twin transforms simulation from a design tool into a safety assurance engine, helping you anticipate edge cases, test interventions, and meet regulatory demands — faster and more reliably than field trials.
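As a small worked example of the kind of check a digital twin makes possible, consider an animal crossing with limited visibility. Under a deliberately simplified model (constant deceleration, a fixed perception-to-brake latency, flat road), stopping distance is `v * t + v^2 / (2 * a)`. The function names and numbers below are illustrative assumptions, not values from the framework.

```python
import math


def stopping_distance_m(speed_mps: float, latency_s: float, decel_mps2: float) -> float:
    """Distance covered during system latency plus constant-deceleration braking."""
    if speed_mps < 0 or latency_s < 0 or decel_mps2 <= 0:
        raise ValueError("speed and latency must be >= 0, deceleration must be > 0")
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * decel_mps2)


def max_safe_speed_mps(detection_range_m: float, latency_s: float, decel_mps2: float) -> float:
    """Highest speed at which the vehicle can still stop within the detection range.

    Solves v * t + v^2 / (2 * a) = d for v (the positive root of the quadratic).
    """
    if detection_range_m < 0 or latency_s < 0 or decel_mps2 <= 0:
        raise ValueError("range and latency must be >= 0, deceleration must be > 0")
    a, t, d = decel_mps2, latency_s, detection_range_m
    return -a * t + math.sqrt((a * t) ** 2 + 2.0 * a * d)


if __name__ == "__main__":
    # Assumed example: 25 m/s (90 km/h), 0.5 s latency, 7 m/s^2 braking.
    print(f"stopping distance: {stopping_distance_m(25.0, 0.5, 7.0):.1f} m")
    # If fog limits reliable detection to 40 m, how fast can the vehicle travel?
    print(f"max safe speed: {max_safe_speed_mps(40.0, 0.5, 7.0):.1f} m/s")
```

A full vehicle model would replace this closed-form estimate with simulated dynamics, but the same question (can the system stop within what the sensors can see?) drives the scenario design.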

Hardware-in-the-Loop and Continuous Testing

Simulation alone isn't enough — real-world AV performance depends on how software interacts with embedded systems. Our framework closes the gap between virtual and physical testing through hardware-in-the-loop (HIL) and full CI/CD integration.

Using ROS2 with the Nav2 stack, we connect real-time control software and physical components directly to the simulation environment. This enables:

- Embedded hardware validation with real processors and control boards

- Real-time system behavior monitoring, including latency, signal loss, and decision loop timing

- End-to-end testing from perception → planning → actuation pathways
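The monitoring described above can be illustrated with a small offline analysis of logged loop samples. This sketch assumes each sample records a sequence number, the timestamp of the sensor input, and the timestamp of the resulting actuation command. The record layout and the deadline value are hypothetical and would come from your own logging and timing budget.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class LoopSample:
    seq: int                # sequence number assigned at the sensor
    sensor_stamp_s: float   # time the sensor frame was captured
    command_stamp_s: float  # time the actuation command was issued


def summarize_loop(samples: list, deadline_s: float) -> dict:
    """Report latency statistics, deadline misses, and gaps in sequence numbers."""
    if not samples:
        raise ValueError("no samples to summarize")
    latencies = [s.command_stamp_s - s.sensor_stamp_s for s in samples]
    seqs = sorted(s.seq for s in samples)
    # A jump of k in consecutive sequence numbers means k - 1 frames never arrived.
    dropped = sum(later - earlier - 1 for earlier, later in zip(seqs, seqs[1:]))
    return {
        "mean_latency_s": mean(latencies),
        "max_latency_s": max(latencies),
        "deadline_misses": sum(1 for lat in latencies if lat > deadline_s),
        "dropped_frames": dropped,
    }


if __name__ == "__main__":
    log = [
        LoopSample(0, 0.0, 0.02),
        LoopSample(1, 0.1, 0.15),
        LoopSample(3, 0.3, 0.33),  # frame 2 is missing from the log
    ]
    print(summarize_loop(log, deadline_s=0.04))
```

Tracking deadline misses and dropped frames separately helps distinguish slow computation from signal loss between the simulator and the hardware.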

To keep things moving quickly, we integrate this into a continuous delivery workflow:

- Committed software updates are automatically built, deployed, and tested

- Every model or logic change triggers scenario-based regression tests

- Failures are logged with telemetry data and automatically reintroduced into the training cycle

This loop guarantees that every update — from perception tuning to navigation logic — has been verified against repeatable, traceable simulations before being considered production-ready.
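A scenario-based regression gate of this kind might look like the following sketch. It assumes each simulated scenario reports whether a collision occurred and the minimum distance kept from obstacles, then compares that distance with a stored baseline. The metric, the tolerance, and all names are illustrative assumptions rather than the framework's actual interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioResult:
    scenario_id: str
    collided: bool
    min_distance_m: float  # closest approach to any obstacle during the run


def regression_gate(results: list, baseline_min_distance_m: dict,
                    tolerance_m: float = 0.5) -> list:
    """Return (scenario_id, reason) pairs for every scenario that fails the gate."""
    failures = []
    for result in results:
        baseline = baseline_min_distance_m.get(result.scenario_id)
        if result.collided:
            failures.append((result.scenario_id, "collision"))
        elif baseline is not None and result.min_distance_m < baseline - tolerance_m:
            failures.append((result.scenario_id, "safety margin regressed"))
    return failures


if __name__ == "__main__":
    baseline = {"deer_crossing_fog": 4.0, "rural_overtake": 2.5}
    run = [
        ScenarioResult("deer_crossing_fog", collided=False, min_distance_m=3.8),
        ScenarioResult("rural_overtake", collided=False, min_distance_m=1.2),
    ]
    failed = regression_gate(run, baseline)
    # A CI job could exit non-zero here and queue the failing scenarios for retraining.
    print(failed)
```

Returning the failing scenario IDs, rather than a single pass/fail flag, gives the pipeline what it needs to log telemetry and feed those cases back into training.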

Interoperability with AV Tech Stack

Autonomous vehicle systems are complex, with a mix of sensors, third-party modules, and constantly evolving software. Our framework works with all these components, making it adaptable for different industries and platforms.

Here’s what makes it stand out:

Multi-sensor support:

Handles LIDAR, radar, stereo/mono cameras, depth sensors, and inertial units, all with synchronized fusion.

Modular architecture:

Easily test, update, or swap out components like perception models, control algorithms, and SLAM modules without hassle.

ROS2 compatibility:

Built using ROS2 and the Nav2 stack for smooth plug-and-play integration with popular AV development tools.

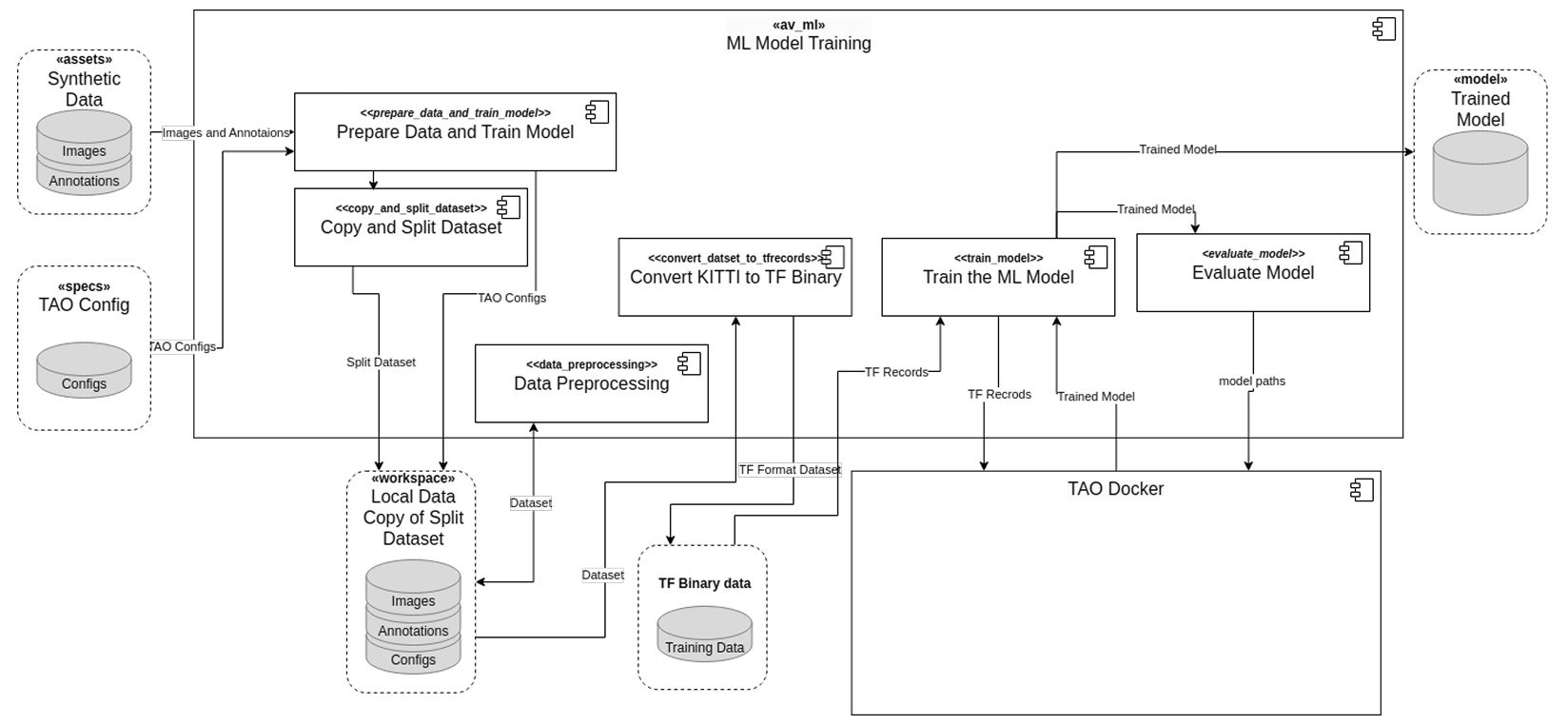

TAO Toolkit integration:

Bring your own model (BYOM) or use NVIDIA’s pre-trained perception models with transfer learning.

Open API access:

Connects to external analytics, dashboards, or monitoring systems using standard protocols.
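The synchronized fusion mentioned under multi-sensor support depends on pairing measurements taken at nearly the same time. A minimal sketch of nearest-timestamp matching follows. It is a simplified stand-in for what a synchronizer such as the approximate-time policy in the ROS 2 `message_filters` package does, and the function name and skew threshold are assumptions.

```python
from bisect import bisect_left


def match_nearest(reference_stamps: list, other_stamps: list, max_skew_s: float) -> list:
    """For each reference timestamp, return the index of the closest timestamp in
    other_stamps (which must be sorted ascending), or None if none is within max_skew_s.
    """
    matches = []
    for stamp in reference_stamps:
        pos = bisect_left(other_stamps, stamp)
        # Only the neighbors on either side of the insertion point can be closest.
        candidates = [i for i in (pos - 1, pos) if 0 <= i < len(other_stamps)]
        best = min(candidates, key=lambda i: abs(other_stamps[i] - stamp), default=None)
        if best is not None and abs(other_stamps[best] - stamp) <= max_skew_s:
            matches.append(best)
        else:
            matches.append(None)
    return matches


if __name__ == "__main__":
    camera = [0.00, 0.10, 0.20]  # seconds
    lidar = [0.01, 0.12, 0.35]
    # Pair each camera frame with a LIDAR sweep no more than 30 ms away.
    print(match_nearest(camera, lidar, max_skew_s=0.03))
```

Frames with no partner inside the skew window are left unmatched rather than fused with stale data, which is usually the safer default for perception.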

This flexible structure allows the framework to scale from early-stage prototypes to full-stack AV systems designed for:

- Urban and highway passenger vehicles

- Rural or low-visibility driving conditions

- Complex edge-case environments requiring high-fidelity scenario validation

Whether you're validating a perception pipeline or deploying a closed-loop obstacle avoidance system, this architecture ensures all components — from sensors to control software — work cohesively across your AV development lifecycle.

Applied Simulation for Safer Autonomous Driving

Use case: Predict the unpredictable

We built a perception accelerator to help vehicles avoid deer on rural roads. Using Isaac Sim and Replicator, we created randomized synthetic datasets that varied environments, camera angles, lighting, and animal positions. Models were pre-trained with NVIDIA TAO and tested in simulation. The result: better detection in tricky conditions and less time spent on manual data collection.

Use case: Reality check with CARLA

We used the open-source CARLA simulator to put AV decision-making to the ultimate virtual road test. We ran thousands of kilometers of simulated driving, including tricky overtaking scenarios on rural roads and extreme weather conditions. By automating these simulations with ROS and Python APIs, we cut real-world testing by 40% and improved our models.

Using NVIDIA Cosmos, we generated photorealistic variants of key clips, altering rain, fog, and lighting. Pairing these variants with CARLA’s physics-based environment enabled broader benchmarking and helped narrow the sim-to-real gap.

Build Better AVs with Simulation

Simulation-first development accelerates timelines and reshapes how safety, quality, and scalability are achieved in autonomous vehicle programs. By validating critical AV behavior in digital environments, teams gain earlier insights, reduce reliance on physical testing, and deliver safer systems with greater confidence.