
Workshop Learns That Three Pillars Are Essential to a Successful Data and AI Strategy

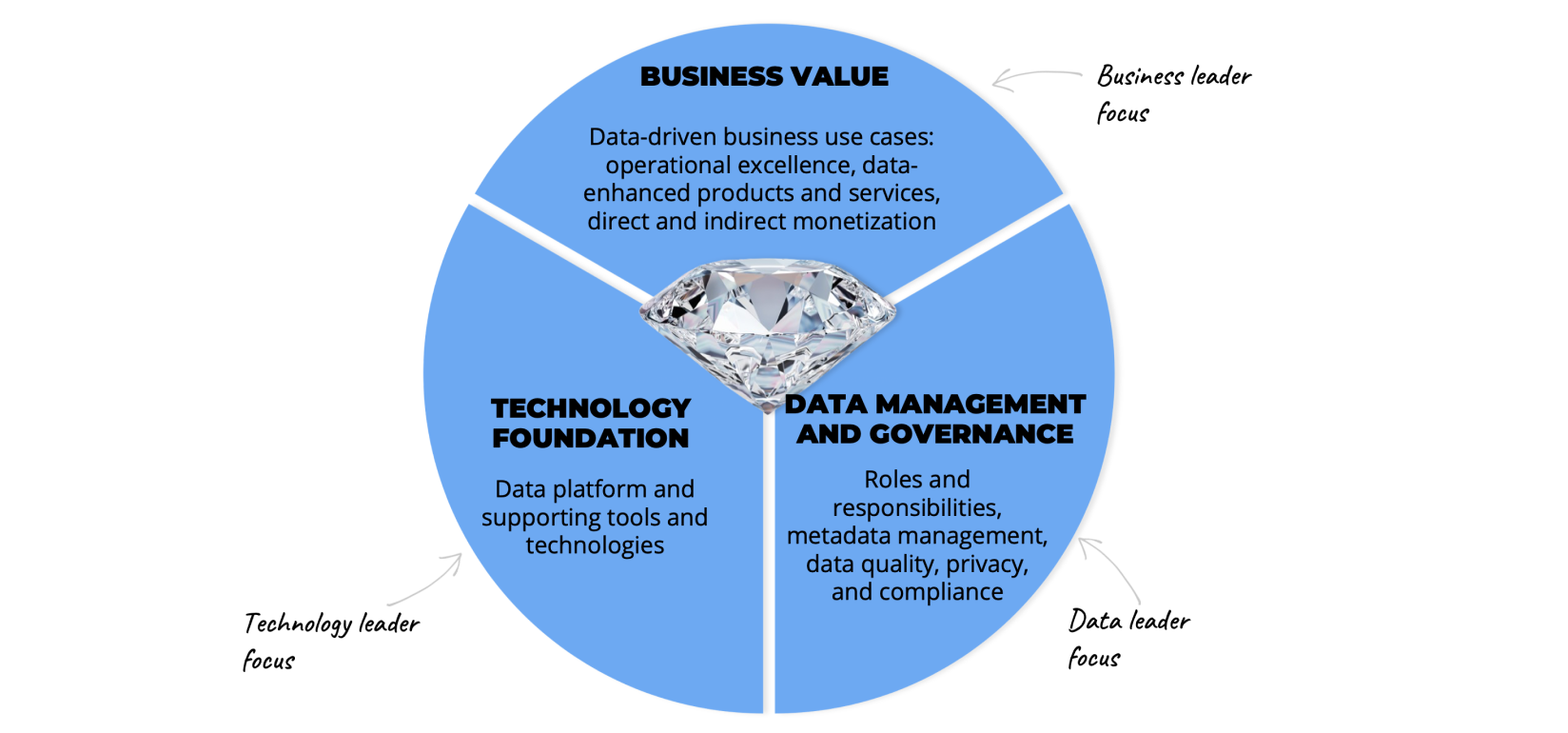

Businesses that want to harness and deploy data as part of a focused strategy to enhance competitiveness and effectiveness must first establish three critical foundational pillars.

Broadly speaking, the first pillar is a solid technology foundation. The second is robust data management and governance policies. The third is identifying the business value to be achieved.

This was the basis of the advice I shared with attendees at our recent client workshop with Google Cloud at its London offices. Below, I also share additional insights from the workshop on how unified data and AI platforms accelerate and scale those data capabilities, along with tips on identifying value and ROI opportunities.

Once firms build the pillars, they can deploy AI tools (such as generative and agentic AI) that make a significant difference across many areas of the business. Examples include automated customer engagement and more exciting, interactive customer experiences. Others include digital supply chain management and more informed, sophisticated executive decision-making.

There is no limit to the areas of a business that can be modernized, automated, and made more intelligent and efficient once the right foundations are in place. But there is already evidence that many firms have tried to move AI forward without these pillars and have paid the price.

Poor priorities

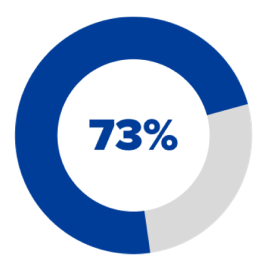

A recent study we commissioned from Wakefield Research, involving 750 global organizations, found that 73% of business tech leaders admitted to poorly prioritizing IT investments: they believe their company has allocated funds or talent to Gen AI trends at the expense of more valuable data and analytics initiatives.

Strategic disagreement

|

Poor prioritization

|

Lack of value understanding

|

Need for strategy

|

|---|---|---|

|

|

|

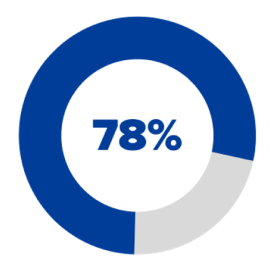

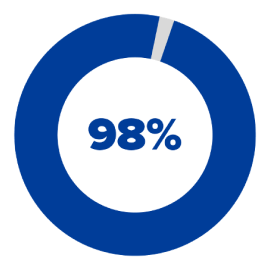

| 73% of business tech leaders believe their company has allocated funds or talent to Gen Al trends at the expense of more valuable data and analytics initiatives. | 78% of VPs and 61% of directors - but just 44% of those at C-level - believe their organization's investment priorities are negatively impacted by leaders not fully understanding how data can generate value. | Nearly all respondents believe a data strategy update is required before being able to gain the full advantages of Al. |

Digging deeper, we discovered this was often caused by a disconnect between those on the board and those at the technology coalface. The research showed that 78% of VP-level staff and 61% of directors believed that their organization’s IT investment priorities had been negatively impacted by leaders not fully understanding how data can generate value. But only 44% of those at C-level felt the same.

It was therefore not surprising to find that 98% of those polled now believe a data strategy update is a prerequisite for obtaining the full advantages of AI.

PoC purgatory

There are multiple examples of these failures and how they occur. One is what we call “AI proof of concept (PoC) purgatory,” where a firm has focused on a quick win with cheap AI innovation. It starts with an excessively simple PoC and is led astray by an over-optimistic assessment of the results. As a result, what was deemed a successful PoC struggles to get into production because it neglected risk, compliance, scalability, or cost challenges, or sometimes all four.

However, a firm that starts with a data-driven business use case, operational excellence, and data-enhanced products and services will be able to identify direct and indirect data monetization paths that are more likely to deliver business value.
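To make the four commonly neglected areas concrete, here is a minimal, hypothetical sketch of a production-readiness gate a PoC review could apply. The `PocReview` record, the helper names, and the idea of a simple pass/fail per area are illustrative assumptions, not a SoftServe method; a real review would attach evidence and owners to each area.

```python
from dataclasses import dataclass, field

# The four areas that PoCs stuck in "purgatory" commonly neglect.
READINESS_AREAS = ("risk", "compliance", "scalability", "cost")


@dataclass
class PocReview:
    """Hypothetical review record for an AI proof of concept."""
    name: str
    # Maps each readiness area to whether it has been assessed and passed.
    assessed: dict = field(default_factory=dict)


def missing_areas(review: PocReview) -> list:
    """Return the readiness areas that have not been assessed and passed."""
    return [area for area in READINESS_AREAS if not review.assessed.get(area, False)]


def ready_for_production(review: PocReview) -> bool:
    """Treat a PoC as production-ready only when every area has been addressed."""
    return not missing_areas(review)


if __name__ == "__main__":
    chatbot = PocReview("support-chatbot", {"risk": True, "cost": True})
    print(missing_areas(chatbot))         # ['compliance', 'scalability']
    print(ready_for_production(chatbot))  # False
```

Making the gate explicit means an "over-optimistic results assessment" cannot, on its own, promote a PoC: the open areas are listed before anyone commits to production.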

Data ghost town

Another example is where a business has become what we call a “data ghost town.” This is where a firm has a technologically advanced but underutilized data platform. Typically, the solution was developed in isolation from real needs, without accounting for pressing business issues or the way the organization works. As a result, even after implementation, business units still complain about data issues and accessibility.

This situation can be avoided by putting in place a sound technology foundation, along with the required supporting tools and technologies. It will stop employees from saying:

Paper piles

Another common reason firms fail to maximize data opportunities is what we simply call “piles of paper.” This is where an enterprise has written data management policies but has failed to activate them, so what should be important data management tools instead become useless bureaucracy. The root cause is often the complexity of integrating well-intentioned but generic policies into the day-to-day routines of both IT and business users. It can end up in a situation where, as one IT executive told us:

This can be avoided by establishing structured data management and governance policies with clear roles and responsibilities, in which metadata management, data quality, privacy, and compliance are embedded in everyday working practices.
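One way to move a policy off paper is to express it as executable checks that run inside the data pipeline. The sketch below is a minimal, hypothetical illustration of that "policy as code" idea: the rule names, record fields, and quarantine behavior are assumptions chosen for the example, covering one data quality rule set and one privacy rule.

```python
from typing import Callable

# Hypothetical policy-as-code sketch: each rule is a name plus a check that
# returns True when a record complies. Rule names and fields are illustrative.
Rule = tuple[str, Callable[[dict], bool]]

RULES: list[Rule] = [
    # Data quality: a customer record must carry an ID and a two-letter country code.
    ("customer_id_present", lambda r: bool(r.get("customer_id"))),
    ("country_is_iso2", lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2),
    # Privacy: raw email addresses must not pass through unmasked.
    ("email_masked", lambda r: "@" not in str(r.get("email", ""))),
]


def violations(record: dict, rules: list[Rule] = RULES) -> list[str]:
    """Return the names of every rule the record fails."""
    return [name for name, check in rules if not check(record)]


def partition(records: list[dict]) -> tuple[list[dict], list[tuple[dict, list[str]]]]:
    """Split records into compliant ones and quarantined ones with their reasons."""
    passed, quarantined = [], []
    for record in records:
        failed = violations(record)
        if failed:
            quarantined.append((record, failed))
        else:
            passed.append(record)
    return passed, quarantined


if __name__ == "__main__":
    records = [
        {"customer_id": "c-001", "country": "GB", "email": "***masked***"},
        {"customer_id": "", "country": "GBR", "email": "jane@example.com"},
    ]
    passed, quarantined = partition(records)
    print(len(passed), len(quarantined))  # 1 1
    print(quarantined[0][1])  # ['customer_id_present', 'country_is_iso2', 'email_masked']
```

Because each failure is reported by rule name, the owner named in the governance policy can see exactly which obligation a record breaks, which keeps the policy part of daily routines rather than a document on a shelf.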

Holistic approach

When a business adopts these three golden pillars within a holistic approach, supported by clear business leadership, strong technology leadership, and embedded data leadership, it establishes solid foundations for future growth and success.

Success pattern: holistic strategy

In this pattern, data can be sliced and served from the cloud infrastructure and data platform when and where it is most needed. It flows through structured data governance and business enablement processes to deliver real, measurable business value.

Data at scale

Even once those foundations are in place for a modern data platform, more work is needed because, according to Google Cloud Data Analytics Engineer Ravish Garg, most organizations have not yet solved how to create value from data at scale.

Pillars for building a modern data strategy

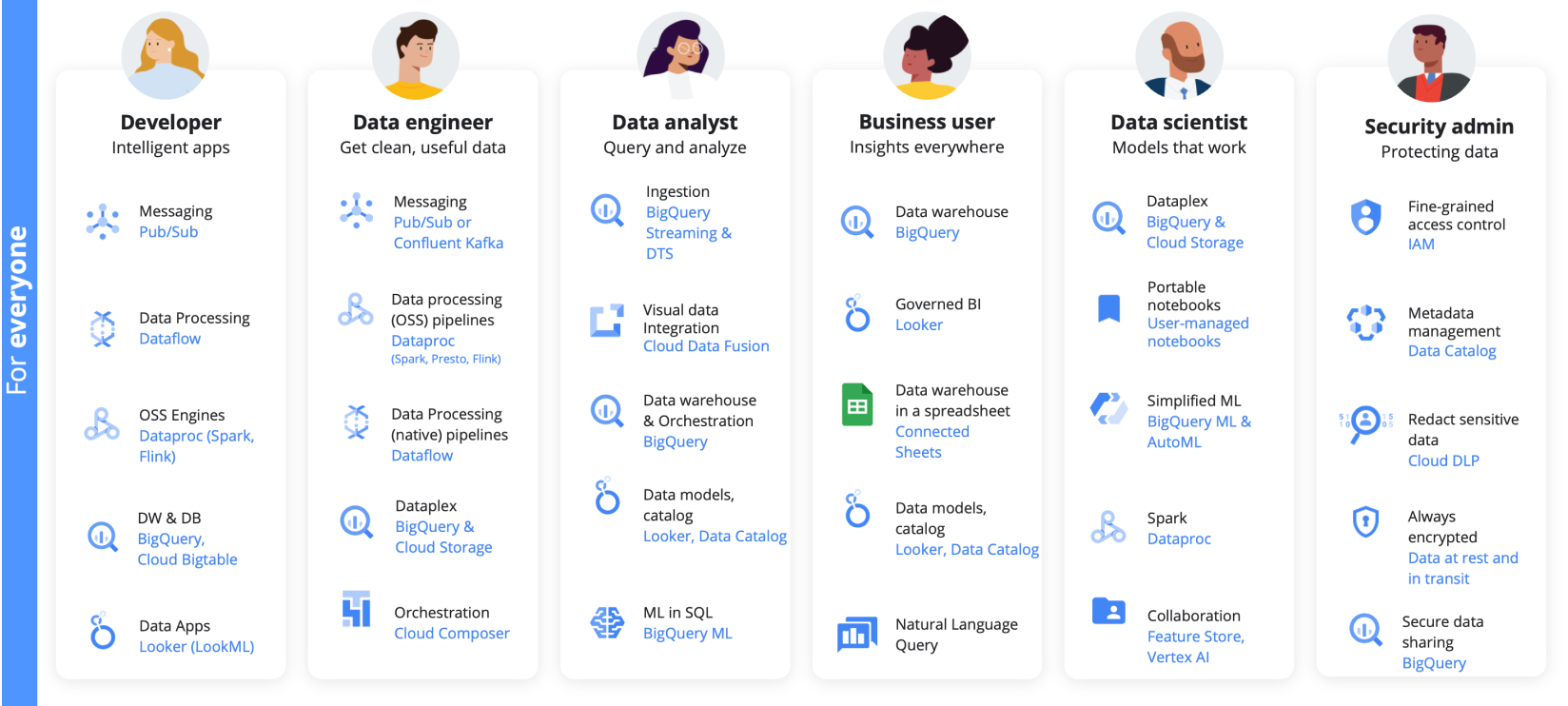

He told the workshop that firms need to recognize that the purpose of a data strategy is to enable an organization to achieve its mission and objectives, using data to deliver a competitive advantage. This can only happen with productive user experiences that enable all users to access and create value from relevant data. It requires principles and practices that ensure data can be published, discovered, built on, and relied on. Further, there must be a data ecosystem built on a unified, open, and intelligent platform, with end-to-end data capabilities for all users and needs.

Organizations need to understand that data is large and multi-format, so managing it requires scale across both structured and unstructured formats. The platform must also handle real-time streams and data at rest while operating seamlessly across cloud platforms and on-premises locations.

Making it work

Garg said that because of these factors, and because data needs more than SQL to be an effective asset, firms must also handle stream analytics, events, and other data-driven applications so that data is accessible to everyone who needs it.

One practical example Garg gave of a collaborative workspace that helps data practitioners accelerate data-to-AI workflows was Google Cloud’s BigQuery console. He mentioned that more than a million queries run from BigQuery’s SQL Workspace every day.

BigQuery Studio expands on SQL Workspace to be a one-stop shop for all data practitioners, including data analysts, data engineers, and data scientists. In BigQuery Studio, you get not only a capable SQL editor but also Python notebooks backed by BigQuery DataFrames. This Python library provides a familiar pandas-style interface while using BigQuery to work with large datasets.

Beyond multi-language support, BigQuery Studio is a fully integrated development environment (IDE) that includes source control and versioning. He said it is now in preview with no allowlist required and invited attendees to try it.

Sophisticated needs

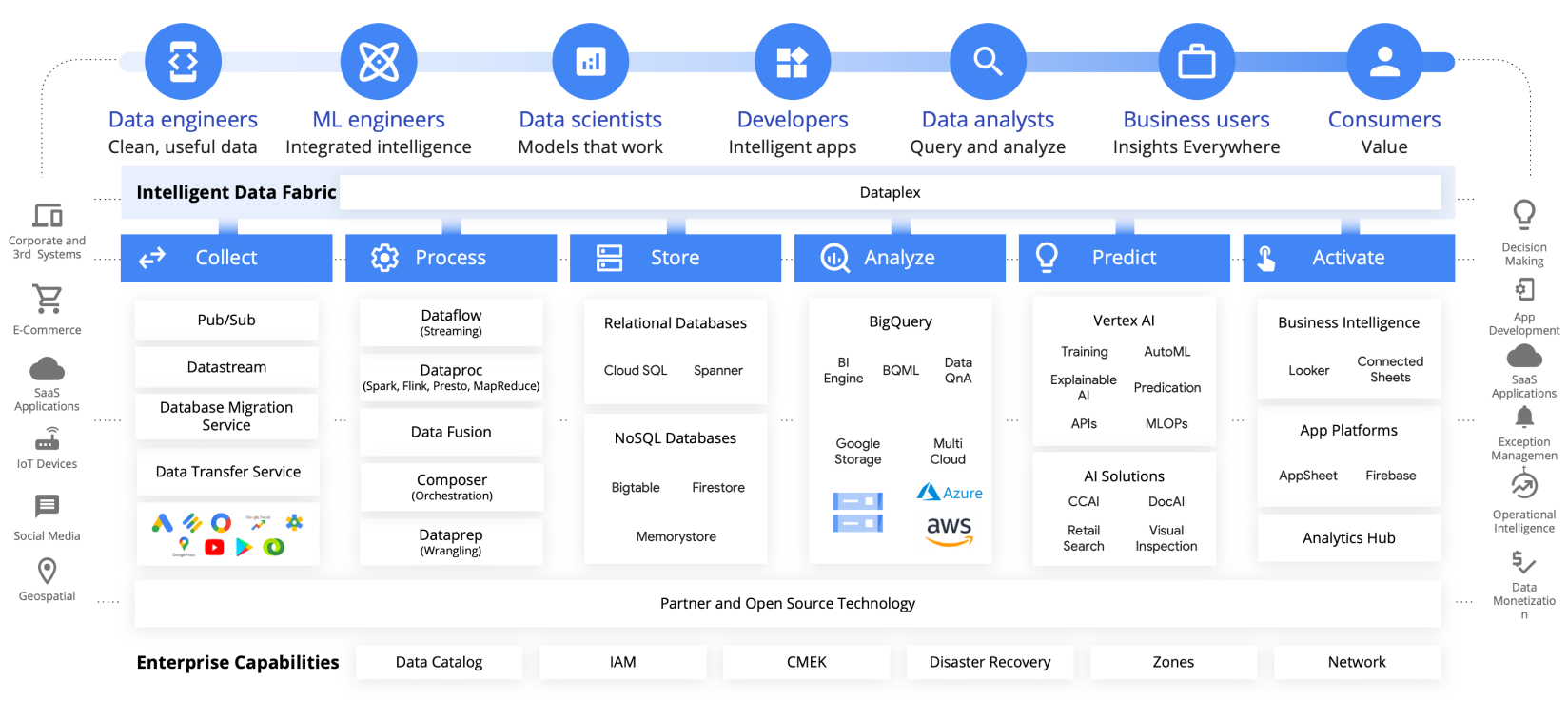

A Data Platform that supports sophisticated needs

This combination of factors and requirements means enterprises must have a data platform that meets sophisticated needs. He said that it must be able to collect, process, and store data, and then analyze, predict, and activate value for both business users and consumers.

Handling this complexity, while meeting the demands of the data and ML engineers, data scientists, and developers who serve those end users, requires the core capabilities of Google Cloud’s unified platform for data-driven innovation: unified engines, unified data, and unified experiences with collaborative workflows.

Garg stated that a unified data and AI platform on Google Cloud can handle limitless data, support all AI/ML and analytical workflows, and, through built-in BI, other applications, and a partner ecosystem, serve everyone. He described it as cost-effective, highly productive, easy to secure, and fully compliant.

From the bottom up, Google Cloud infrastructure supports an AI foundation with multimodal storage and multiple engines, which delivers clear results to users through a unified UX at the front end.

AI-powered data

Once all this is in place, Garg said, the future of AI-powered data will be autonomous and agent-driven. It will feature a family of data agents (including engineering, governance, data science, and conversational analytics agents) working together to improve responses to customer inquiries. This will transform today’s pedestrian, one-dimensional replies into instant, personalized results powered by sentiment analysis driven by large language models.

A platform for all users and intents throughout the data life cycle

This will give businesses deeper consumer understanding and better demand sensing, enabling smarter consumer segmentation and faster qualification. In turn, that will improve product recommendations and increase loyalty through better optimization of consumer lifetime value.

ROI techniques

Once the foundation pillars, a unified platform, and a data ecosystem are in place, it is important to understand and evaluate the potential projects that will deliver business value. My colleague Michal Bochenek, SoftServe’s GCP Practice Director for Big Data and Analytics, explained during the workshop that this can be achieved by applying ROI (return on investment) and COI (cost of inaction) estimation tips and techniques to new data initiatives.

When analyzing these projects, businesses must consider the balance between earning more and spending less. Earning more could come from data monetization and revenue enablement, or from improved sales targeting, higher conversion rates, and increased sales velocity. Spending less could involve reduced infrastructure spending, improved risk mitigation, and cost avoidance. It may also include general operational efficiency gains through time and money saved at scale.

Business value

Not optional

The first point to remember, he said, is that if an initiative cannot be traced to an impact on a company’s financial statements, it does not represent value. Second, everything must be measurable, because “quantification is not optional.” Expenditures and savings must be calculated, with both expected TCO reductions and expected revenue growth expressed as a percentage of the planned investment in a project. That makes the economic impact of data integration clear. This will only happen if the business is fully involved in project planning, as that is where most value is found.
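As a rough illustration of expressing expected TCO reductions ("spend less") and revenue growth ("earn more") as a percentage of the investment, here is a minimal Python sketch. The field names, the three-year default horizon, and the simple undiscounted ROI formula are assumptions for illustration; a real business case would typically also discount future cash flows and attach confidence ranges to each estimate.

```python
from dataclasses import dataclass


@dataclass
class InitiativeEstimate:
    """Hypothetical estimates for a data initiative, all in the same currency."""
    investment: float      # total cost to deliver the initiative
    tco_reduction: float   # expected annual spend reduction ("spend less")
    revenue_growth: float  # expected annual revenue uplift ("earn more")
    years: int = 3         # evaluation horizon (an assumption)


def benefit_as_pct_of_investment(e: InitiativeEstimate) -> dict:
    """Express each annual benefit stream as a percentage of the investment."""
    return {
        "tco_reduction_pct": 100 * e.tco_reduction / e.investment,
        "revenue_growth_pct": 100 * e.revenue_growth / e.investment,
    }


def simple_roi_pct(e: InitiativeEstimate) -> float:
    """Undiscounted ROI over the horizon: (total benefit - investment) / investment."""
    total_benefit = (e.tco_reduction + e.revenue_growth) * e.years
    return 100 * (total_benefit - e.investment) / e.investment


if __name__ == "__main__":
    plan = InitiativeEstimate(investment=500_000, tco_reduction=100_000, revenue_growth=150_000)
    print(benefit_as_pct_of_investment(plan))  # {'tco_reduction_pct': 20.0, 'revenue_growth_pct': 30.0}
    print(simple_roi_pct(plan))                # 50.0
```

The figures above are placeholders, not workshop data. Keeping the two benefit streams separate shows at a glance whether a case rests mainly on earning more or on spending less.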

Some useful tips for making this work, Bochenek continued, are to start measuring the things that matter now, so that you can not only justify the change but also calculate the improvements later. Then allocate enough time to collect all the relevant information and ask tough questions of business users. Lastly, always be prepared to learn from others’ mistakes so that you understand the cost of action (investment) vs. the cost of inaction.

Together, these steps help ensure you develop a well-founded plan from a solid business case, because no project should be justified solely on the basis of migration or modernization.
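The cost-of-action versus cost-of-inaction comparison can be sketched as two running totals and the first year in which acting becomes cheaper. This minimal sketch assumes that doing nothing carries a recurring annual loss that grows at a fixed rate, and that acting means an upfront investment plus a yearly run cost; the function names and all figures are hypothetical placeholders, not the workshop's method or data.

```python
from typing import Optional


def cumulative_cost_of_inaction(annual_loss: float, growth_rate: float, years: int) -> list:
    """Running total of losses if nothing changes; losses grow each year (assumption)."""
    totals, running, loss = [], 0.0, annual_loss
    for _ in range(years):
        running += loss
        totals.append(running)
        loss *= 1 + growth_rate
    return totals


def cumulative_cost_of_action(investment: float, annual_run_cost: float, years: int) -> list:
    """Running total of the upfront investment plus yearly run costs."""
    return [investment + annual_run_cost * (year + 1) for year in range(years)]


def break_even_year(action: list, inaction: list) -> Optional[int]:
    """First year (1-based) in which acting has cost less than not acting, else None."""
    for year, (act, idle) in enumerate(zip(action, inaction), start=1):
        if act < idle:
            return year
    return None


if __name__ == "__main__":
    inaction = cumulative_cost_of_inaction(annual_loss=200_000, growth_rate=0.10, years=5)
    action = cumulative_cost_of_action(investment=600_000, annual_run_cost=50_000, years=5)
    print(break_even_year(action, inaction))  # 4
```

Framing inaction as a growing cost, rather than zero, is what lets the investment be weighed fairly: in this hypothetical, standing still looks cheaper for three years and more expensive from year four onward.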

Conclusion

To sum up, this might sound like a lot of work. But our research shows that it is time and money well spent, given the potential waste when projects are tackled the wrong way around. That is why we at SoftServe provide the expert support clients need when embarking on these journeys, and why Google Cloud recognizes us as a Premier Consulting Partner.

It is also why we have developed offerings such as our Google Cloud Jumpstart program and our Intelligence Framework for Google Cloud, which is specifically designed to help clients reduce risk and rapidly develop, deploy, and scale AI solutions. If you would like to find out more, see a demo, or speak to an expert, just get in touch.
