
There are many tools available today that are designed to automate security checks, including a number of open-source AWS security tools. However, I think that some people rely too much on tools, as if conducting an AWS security assessment were the same as converting a scanner’s output into a fancy-looking report. Nothing could be further from the truth.

Let’s take a look at what scanners are missing, and why I think tools can’t replace a human assessor in performing an effective AWS security assessment.

Scanners don’t know the big picture

First of all, popular security scanners such as Prowler and ScoutSuite aren’t context-aware.

Imagine a company that stores customer Personally Identifiable Information (PII) in several S3 buckets. The buckets aren't publicly accessible and use SSE-S3 as default encryption. Scanners will not raise an issue here, since default encryption is enabled. But because SSE-S3 uses S3-managed keys, encryption adds no access boundary of its own: S3 encrypts and decrypts transparently, so every principal in the AWS account with S3 permissions on those objects can still read them.

Additionally, an AWS Identity and Access Management (IAM) policy that grants every S3 action on any resource (think of the AWS-managed AmazonS3FullAccess policy) is attached to dozens of IAM users and roles. That creates a big security concern: with no restrictive guardrails protecting the sensitive buckets, compromising a single low-privileged internal principal may end in disclosure of sensitive information, which may have dramatic consequences for the company.

But such a threat most likely won’t be raised by a scanner, because it simply isn’t aware of which assets need additional protection mechanisms. On the other hand, an assessor who knows where sensitive data is stored can easily identify potential threats and recommend security controls to mitigate them (such as a service control policy (SCP) or a restrictive bucket policy).
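
To make the idea concrete, here is a minimal sketch of the kind of context-aware check an assessor might script once the sensitive buckets are known. The bucket names and the function name are hypothetical, and the logic is deliberately simplified: it only looks at `Allow` statements with `Action` and `Resource`, and ignores `Deny`, `NotAction`, `NotResource`, conditions, bucket policies and SCPs, so treat a hit as a lead to investigate rather than a verdict.

```python
from fnmatch import fnmatchcase

# Hypothetical inventory produced during threat modeling: buckets holding PII.
SENSITIVE_BUCKETS = {"customer-pii-prod", "customer-pii-archive"}


def _as_list(value):
    """IAM accepts either a single value or a list for Statement/Action/Resource."""
    return value if isinstance(value, list) else [value]


def sensitive_buckets_exposed(policy_document, sensitive_buckets=SENSITIVE_BUCKETS):
    """Return the sensitive buckets whose objects an Allow statement lets a principal read."""
    exposed = set()
    for stmt in _as_list(policy_document.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        # IAM action names are case-insensitive, so compare in lower case.
        actions = [a.lower() for a in _as_list(stmt.get("Action", []))]
        if not any(fnmatchcase("s3:getobject", pattern) for pattern in actions):
            continue
        for resource in _as_list(stmt.get("Resource", [])):
            for bucket in sensitive_buckets:
                # Probe with an arbitrary object key inside the bucket.
                if fnmatchcase(f"arn:aws:s3:::{bucket}/any-key", resource):
                    exposed.add(bucket)
    return exposed


# A statement shaped like the one in AmazonS3FullAccess: every S3 action, every resource.
s3_full_access = {
    "Version": "2012-10-17",
    "Statement": [{"Effect": "Allow", "Action": ["s3:*"], "Resource": "*"}],
}
print(sorted(sensitive_buckets_exposed(s3_full_access)))
```

In practice the policy documents would come from the account itself (for example, exported with the AWS CLI or an SDK); the point is that the list of sensitive buckets is something only a person who understands the environment can supply.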

More findings don’t necessarily mean better results

Furthermore, scanners can flood you with false positives. It's easy to run a scanner, but a report showing hundreds of high- and medium-severity issues is useless if nobody can tell which of them matter. Scanners that haven't been customized tend to report many issues that are not applicable in your case.

For example, you may get findings about the lack of a restrictive password policy even though you don't have any IAM users, or about access logs not being enabled even though you collect them with a third-party solution. A security team will then need to pick out the relevant issues one by one, which can be a very time-consuming task in complex environments.

In the worst-case scenario, the report review task will get stuck in a backlog, leaving your team unaware of issues that really matter.
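
One way to keep that review manageable is to encode what you know about the environment and let it suppress findings that cannot apply. The sketch below is only an illustration: the `Finding` and `Context` types, the check IDs and the suppression rules are all hypothetical and do not correspond to any particular scanner's output format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    check_id: str
    severity: str
    resource: str


@dataclass(frozen=True)
class Context:
    """Facts about the environment that a scanner cannot know by itself."""
    has_iam_users: bool
    access_logs_collected_elsewhere: bool


# Hypothetical check IDs mapped to a predicate that says "not applicable here".
SUPPRESSIONS = {
    "iam_password_policy_weak": lambda ctx: not ctx.has_iam_users,
    "s3_access_logging_disabled": lambda ctx: ctx.access_logs_collected_elsewhere,
}


def triage(findings, ctx):
    """Split findings into (relevant, suppressed) using the environment context."""
    relevant, suppressed = [], []
    for finding in findings:
        rule = SUPPRESSIONS.get(finding.check_id)
        if rule is not None and rule(ctx):
            suppressed.append(finding)
        else:
            relevant.append(finding)
    return relevant, suppressed


findings = [
    Finding("iam_password_policy_weak", "medium", "account"),
    Finding("s3_access_logging_disabled", "medium", "bucket/app-logs"),
    Finding("s3_bucket_public_read", "high", "bucket/customer-pii-prod"),
]
ctx = Context(has_iam_users=False, access_logs_collected_elsewhere=True)
relevant, suppressed = triage(findings, ctx)
print([f.check_id for f in relevant])
```

Keeping the suppressed findings in a separate list, rather than dropping them, makes it possible to revisit them when the context changes (for instance, when the first IAM user is created).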

Scanners may not cover everything

Another consideration is whether you can rely on a scanner's output. In other words, do you know which security checks are missing from your scanner?

A good example is hardcoded secrets, which may open many doors to unauthorized principals. Scanners usually cannot detect a login and a password passed in a command in EC2 user data or in the environment variables of an ECS task definition.

Hardcoded secrets can be a real game-changer in an attacker’s hands. Just imagine a compromised low-privileged user or role that is allowed to read credentials for an external CI/CD pipeline with administrative permissions to the entire organization. Unfortunately, scanners are blind to those types of issues.
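
As a rough illustration of the manual check this calls for, the sketch below searches a decoded EC2 user data script for a couple of secret-like patterns. It assumes the user data arrives base64-encoded, as the EC2 API returns it; the two patterns and the function names are my own simplifications, and a real review would use a much broader rule set and still need a human to confirm each hit.

```python
import base64
import re

# Illustrative patterns only; far from exhaustive.
SECRET_PATTERNS = {
    # Access key IDs for long-term (AKIA) and temporary (ASIA) credentials.
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    # Something like DB_PASSWORD=..., api_token: ..., secret = ...
    "password_assignment": re.compile(
        r"(?i)(?:password|passwd|secret|token)\w*\s*[=:]\s*\S+"
    ),
}


def find_secrets(text):
    """Return (line_number, pattern_name) pairs for lines that look like secrets."""
    hits = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((line_no, name))
    return hits


def find_secrets_in_user_data(encoded_user_data):
    """Decode base64 user data, then scan it line by line."""
    decoded = base64.b64decode(encoded_user_data).decode("utf-8", errors="replace")
    return find_secrets(decoded)


script = "#!/bin/bash\nexport DB_USER=app\nexport DB_PASSWORD=hunter2\n"
encoded = base64.b64encode(script.encode()).decode()
print(find_secrets_in_user_data(encoded))
```

The same `find_secrets` helper could be pointed at `NAME=value` strings built from the environment variables of an ECS task definition.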

In addition, some scanners (especially open-source scanners that are no longer maintained or only occasionally updated) lack rules for newer AWS services or newly introduced features. You need a full inventory of your cloud assets to identify those gaps in scanner coverage and address them, either manually or with custom scripts.
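
A simple starting point for such a custom script is to compare the services that appear in your asset inventory with the services your scanner has checks for. The sketch below assumes you already have a list of resource ARNs and a set of covered service names; both inputs, and the function names, are hypothetical.

```python
from collections import Counter


def service_of(arn):
    """Extract the service field from an ARN (arn:partition:service:region:account:resource)."""
    parts = arn.split(":", 5)
    if len(parts) < 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    return parts[2]


def uncovered_resources(inventory_arns, covered_services):
    """Count inventory resources per service that the scanner has no checks for."""
    counts = Counter(service_of(arn) for arn in inventory_arns)
    return {svc: n for svc, n in counts.items() if svc not in covered_services}


inventory = [
    "arn:aws:s3:::app-logs",
    "arn:aws:bedrock:us-east-1:111111111111:agent/A1",
    "arn:aws:bedrock:us-east-1:111111111111:agent/A2",
]
print(uncovered_resources(inventory, covered_services={"s3", "iam", "ec2"}))
```

The output only tells you where the scanner is silent; what to check for in those services still has to come from the assessor.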

Skipped data flows and relations

In a multi-account architecture, accounts are often related to each other either on the identity level (such as an IAM role that can be assumed by other accounts in your organization or by a third party) or on the data level (such as network traffic routed over VPC peering). But scanners are not aware of the big picture of your infrastructure and are usually blind to those potential attack vectors.

As a result, you can be unaware that access to your critical assets is still shared, via IAM roles or resource-based policies, with a third party you no longer work with. It's even scarier when you realize that 82% of companies unknowingly give third parties access to all their cloud data.
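
To review the identity-level relations, an assessor can list which accounts each role trusts and compare them with the accounts that belong to the organization. The following is a minimal sketch under stated assumptions: the account IDs are made up, the function name is hypothetical, and only `Allow` statements with an `AWS` principal are considered (conditions such as `sts:ExternalId`, and federated or service principals, are ignored).

```python
import re

# Hypothetical set of account IDs that belong to your own organization.
ORG_ACCOUNT_IDS = {"111111111111", "222222222222"}

# Matches a bare 12-digit account ID or an IAM/STS principal ARN containing one.
_ACCOUNT_ID = re.compile(r"^(?:arn:aws[a-z-]*:(?:iam|sts)::)?(\d{12})(?::.*)?$")


def _as_list(value):
    return value if isinstance(value, list) else [value]


def external_trusted_accounts(trust_policy, org_account_ids=ORG_ACCOUNT_IDS):
    """Return account IDs (or "*") outside the organization that may assume the role."""
    external = set()
    for stmt in _as_list(trust_policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal", {})
        if principal == "*":
            external.add("*")
            continue
        if not isinstance(principal, dict):
            continue
        for value in _as_list(principal.get("AWS", [])):
            if value == "*":
                external.add("*")
                continue
            match = _ACCOUNT_ID.match(value)
            if match and match.group(1) not in org_account_ids:
                external.add(match.group(1))
    return external


trust_policy = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"AWS": [
            "arn:aws:iam::111111111111:root",
            "arn:aws:iam::333333333333:role/vendor-integration",
        ]},
        "Action": "sts:AssumeRole",
    }],
}
print(external_trusted_accounts(trust_policy))
```

Whether an external account found this way is a current vendor or a forgotten one is exactly the kind of question that has to be answered with the customer, not by the script.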

Similarly, access can be given on the data level. An attacker who has compromised an EC2 instance in a development account may be able to reach a production database via VPC peering or a non-restrictive internal proxy. Not to mention, of course, the entire class of supply chain attacks (watch my example of releasing a malicious NPM package and using it in a Lambda function).

Threat modeling to the rescue!

So how can we address these issues? How can an assessor learn the big picture of a cloud infrastructure to provide a valuable AWS security assessment? I find it very useful to start with a threat modeling session. While there are multiple approaches, I like a simple whiteboarding session with the customer's representatives. In times of remote work, you can find various tools that allow you to do the same virtually.

I use Lucid Spark. The goals of such a session are to:

- Understand the usage context of cloud assets,

- Identify critical assets and how they are used (who needs access, what level of access, how assets are processed and so on), and

- Identify all potential threats and attack vectors.

When you're leading such a threat modeling session, it’s important to cover all of the security-relevant cloud infrastructure areas. A good list of questions to ask and topics to consider is given in Marco Lancini's spreadsheet, "What to look for when reviewing a company's infrastructure".

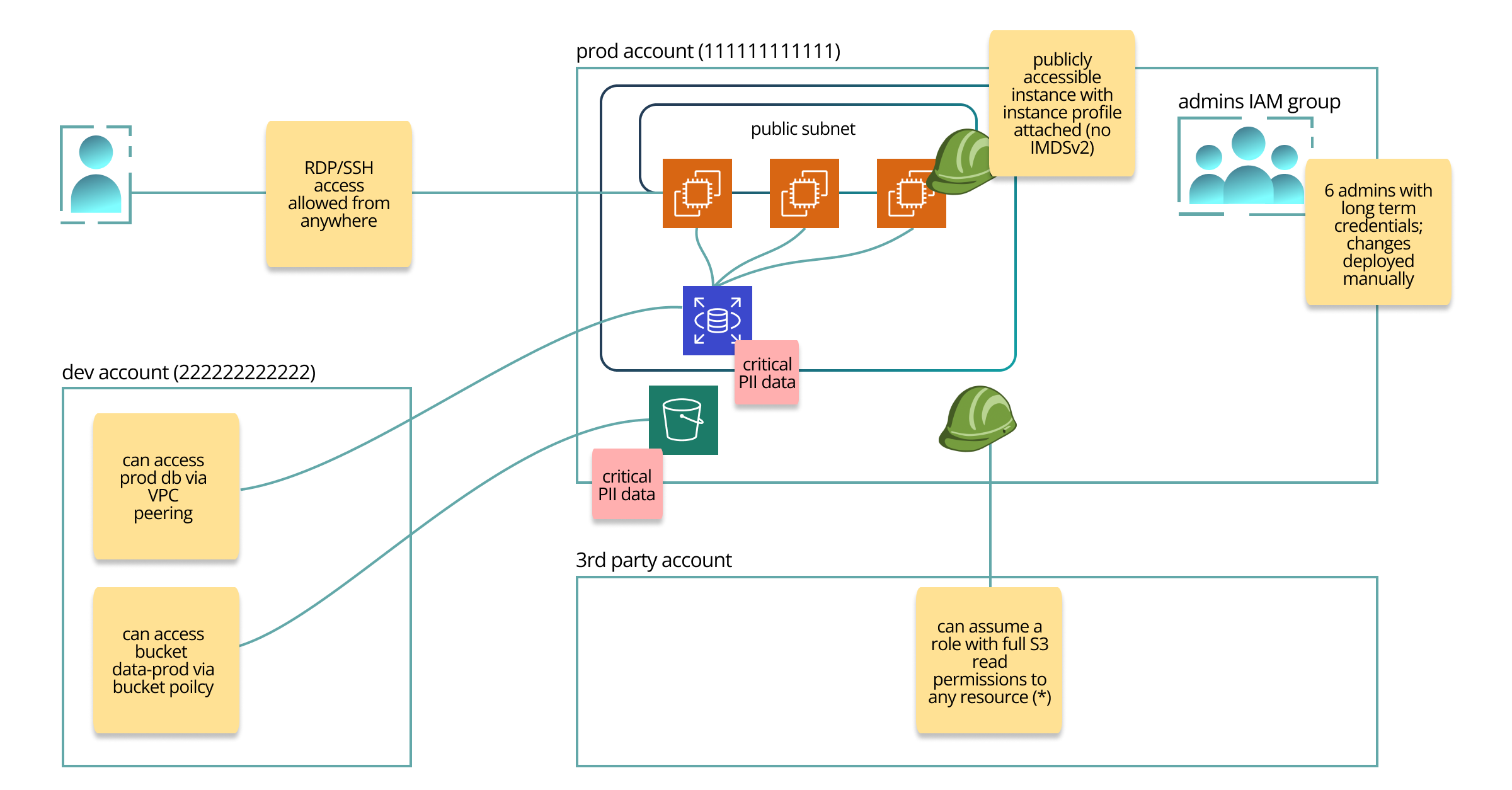

An example output of such session may look like this:

Of course, it’s just a sample to give you an idea of what it may look like. Usually, I try to upload the customer’s real architecture diagrams and then add threats and comments using sticky notes, highlight relations, and so on. After discussing (and understanding) every piece of the system and identifying potential threats, you’re ready to begin the assessment, which will most likely extend your initial list of threats.

Another benefit of a cloud infrastructure threat modeling session is that attendees who look at the big picture of their own system can identify security gaps by themselves. We tend to be project-oriented and forget how our initially good-looking solutions can impact the security of the whole organization. That’s also a good reason to perform such an exercise with your team internally before starting any kind of project.

Summary

Without a doubt, security tools are very helpful in automating security checks, but they should be treated only as a complement to the assessor, never as a replacement. Without an understanding of the big picture of a cloud infrastructure, a list of critical assets, and a view of potential attack vectors, a cloud security assessment may end with a huge report full of false positives.

Consequently, engineers will waste their time addressing irrelevant issues instead of mitigating serious threats. That's why a deep understanding of what you're going to assess is a fundamental step that should start every assessment.